Testing software effectively is crucial for delivering high-quality applications. However, as codebases grow in size and complexity, manually testing all possible use cases becomes infeasible. This results in limited test coverage – a key measure of how thoroughly a system has been tested.

Low test coverage leads to undiscovered defects and unreliable software. To maximize test coverage, teams are adopting parallel execution in their test automation, for example with Selenium and Python.

Why Test Coverage Matters

Test coverage refers to the percentage of code or use cases exercised by a test suite. High test coverage means more of the system has been verified, giving teams confidence in shipping stable releases.

However, achieving high coverage manually is challenging. Factors like time constraints, complex workflows, and large test data sets limit manual testing. Even with automated testing, long-running sequential test suites throttle coverage.

This creates gaps in testing. Commonly cited industry figures put manually achieved coverage at roughly 30-70%, while coverage above 90% is often recommended for enterprise-grade applications.

Parallel testing helps address this gap. By distributing tests across multiple resources simultaneously, teams can expand coverage in the same testing cycles.

Overcoming Coverage Challenges with Parallel Execution

Parallel execution distributes test suites across multiple CPUs and machines to run concurrently. This contrasts with sequential execution that runs tests serially on a single resource.

Let’s understand how parallel testing helps boost coverage:

Faster Test Cycles

By using multiple resources simultaneously, parallel testing cuts test execution time significantly. Teams can fit more tests into the same cycle without slowing delivery or compromising stability.

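For example, a Selenium Python suite that takes 2 hours to run sequentially on a 4-core machine might finish in about 30 minutes when split across four parallel workers. That cuts cycle time by 75%, assuming the tests are independent and divide into roughly equal shares. A single long test or shared setup stretches the parallel run.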
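The 75% figure assumes the suite splits into four equal shares. One rough way to sanity-check that assumption is to simulate assigning measured test durations to N workers. The sketch below uses a greedy longest-first rule, which only approximates how pytest-xdist actually schedules tests. The durations are made-up placeholders.

```python
import heapq

def estimate_wall_clock(durations, workers):
    """Estimate parallel wall-clock time by giving the next-longest test to
    whichever worker is least busy (longest-processing-time heuristic)."""
    if workers < 1:
        raise ValueError("workers must be at least 1")
    # Min-heap holding each worker's accumulated busy time
    loads = [0.0] * workers
    heapq.heapify(loads)
    for d in sorted(durations, reverse=True):
        least_busy = heapq.heappop(loads)
        heapq.heappush(loads, least_busy + d)
    # The suite finishes when the busiest worker finishes
    return max(loads)

if __name__ == "__main__":
    # Placeholder durations in minutes; both suites total 120 minutes
    balanced = [5.0] * 24
    skewed = [60.0] + [5.0] * 12
    for name, suite in (("balanced", balanced), ("skewed", skewed)):
        parallel = estimate_wall_clock(suite, workers=4)
        print(f"{name}: sequential={sum(suite)} min, parallel={parallel} min, "
              f"saved={1 - parallel / sum(suite):.0%}")
```

With these placeholder numbers, the balanced suite reaches the ideal 75% saving. In the skewed suite, the single 60-minute test caps the saving at 50% no matter how the other tests are spread.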

Increased Volume and Scope

Parallel testing facilitates running more high-volume, long-running, and complex integration tests cost-effectively.

Expensive subsystem tests, such as large-scale database checks, become practical to include, which widens scope. Teams can also mimic real-world environments more accurately by parameterizing parallel runs with diverse test data sets.

Reduced Choke Points

Desktop browser testing is a common bottleneck that limits coverage. With parallel execution, browser tests can scale out on cloud infrastructure, for example across the nodes of a Selenium Grid.

Teams can run hundreds of browser test suites in parallel, which frees time for exploratory and usability testing. That extra exploration can expose critical edge cases that scripted scenarios miss.

Configuring Your Test Environment

Leveraging parallel execution requires configuring your testing infrastructure and frameworks appropriately:

Hardware and Cloud Resources

To utilize parallelism effectively, provision adequate hardware or leverage cloud elasticity for dynamic scaling:

On-premises hardware: use multi-core CPUs, high-memory configurations, and distributed testing labs.

Cloud resources: cloud build agents provide elastic capacity, so parallelism can scale with demand, limited mainly by quotas and budget.

Test Runners and Reporting Tools

Popular Python test runners such as PyTest and Unittest can run tests in parallel through plugins or external runners:

PyTest gains parallel execution through the pytest-xdist plugin, which distributes tests across multiple worker processes for scalability.

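Unittest has no built-in parallel mode. External runners such as nose2 (through its multiprocessing plugin) enable parallel execution across processes, and PyTest with pytest-xdist can also run unittest-style tests in parallel.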

Allure and ExtentReports aid debugging and analysis by collating results across parallel runs into shareable reports.

Writing Effective Parallel Test Cases

Efficient test case design is critical before enabling parallelization. Keep these guidelines in mind:

Modular Tests

Break the suite into self-contained units that can run independently, without shared state or data dependencies.

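```python
# Testing payment workflows: each test sets up its own data,
# so any worker can run it in any order
def test_add_new_payment_method(): ...

def test_edit_payment_method(): ...

def test_delete_payment_method(): ...
```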
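Here is a minimal sketch of what "self-contained" can look like. Each test creates its own customer and payment method instead of relying on records left behind by an earlier test, so the three tests pass in any order and on any worker. PaymentStore and new_customer are hypothetical stand-ins for whatever API or database the real suite talks to.

```python
import uuid

class PaymentStore:
    """Hypothetical in-memory stand-in for a payment-methods backend."""

    def __init__(self):
        self._methods = {}

    def add(self, customer_id, card_last4):
        method_id = str(uuid.uuid4())
        self._methods[method_id] = {"customer": customer_id, "last4": card_last4}
        return method_id

    def edit(self, method_id, card_last4):
        self._methods[method_id]["last4"] = card_last4

    def delete(self, method_id):
        del self._methods[method_id]

    def get(self, method_id):
        return self._methods.get(method_id)

def new_customer():
    # A fresh, unique customer per test, so no test depends on another's data
    return f"customer-{uuid.uuid4()}"

def test_add_new_payment_method():
    store, customer = PaymentStore(), new_customer()
    method_id = store.add(customer, "4242")
    assert store.get(method_id)["customer"] == customer

def test_edit_payment_method():
    # Creates the method it edits instead of reusing one from another test
    store, customer = PaymentStore(), new_customer()
    method_id = store.add(customer, "4242")
    store.edit(method_id, "1881")
    assert store.get(method_id)["last4"] == "1881"

def test_delete_payment_method():
    store, customer = PaymentStore(), new_customer()
    method_id = store.add(customer, "4242")
    store.delete(method_id)
    assert store.get(method_id) is None
```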

Isolated Fixtures

Separate test fixtures and data setup from execution logic for independence across test classes:

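```python
import pytest

# Risky: rebuilds shared database state before every test. If several
# xdist workers point at the same database, one worker's setup can
# overwrite data another worker is using.
@pytest.fixture
def app_data():
    ...  # DB setup done before each test case

# Improved: expensive setup runs only once per module. Under pytest-xdist,
# each worker process still runs it separately.
@pytest.fixture(scope="module")
def db_setup():
    ...  # DB setup performed once for the module (per worker)
```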
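One common way to remove shared-database collisions entirely is to give each xdist worker its own database. pytest-xdist sets the PYTEST_XDIST_WORKER environment variable (values such as gw0, gw1) in each worker process. The sketch below uses that variable to name a per-worker SQLite file. SQLite, the orders table, and both helper names are illustrative assumptions; a real suite might instead create one schema per worker in its actual database.

```python
import os
import sqlite3
from pathlib import Path

def worker_db_path(base_dir):
    # "gw0", "gw1", ... under pytest-xdist; fall back to "master" when
    # tests run without -n and the variable is unset
    worker = os.environ.get("PYTEST_XDIST_WORKER", "master")
    return Path(base_dir) / f"test_{worker}.sqlite3"

def create_worker_db(base_dir):
    """Create (or reuse) this worker's private database with its schema."""
    conn = sqlite3.connect(worker_db_path(base_dir))
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (id INTEGER PRIMARY KEY, status TEXT)"
    )
    conn.commit()
    return conn

# In conftest.py this would typically sit inside a fixture, e.g.:
# @pytest.fixture(scope="session")
# def db(tmp_path_factory):
#     conn = create_worker_db(tmp_path_factory.mktemp("db"))
#     yield conn
#     conn.close()
```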

Careful Parameterization

Parameterize data variations in tests without baking in environment specifics for portability:

```python
import pytest

@pytest.mark.parametrize("browser", ["chrome", "firefox"])
def test_login(browser):
    print(f"Testing login on {browser}")
```

This allows the same test logic to apply across parameters in parallel runs.

Executing Tests in Parallel

Let’s go through a sample workflow for running Selenium Python tests in parallel:

1. Install PyTest and the pytest-xdist Plugin

Install the PyTest test runner and the pytest-xdist plugin to enable distributed testing:

```shell
pip install pytest pytest-xdist
```

2. Configure Parallelism in Config File

Specify parallel execution in pytest.ini or tox.ini config files:

```ini
[pytest]
# Use 4 worker processes (requires pytest-xdist)
addopts = -n 4
```

3. Run Tests with PyTest

Trigger PyTest to distribute tests across multiple workers using the -n flag:

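```shell
pytest -v test_module.py -n 8
```

The tests are split across 8 worker processes. Because PyTest applies addopts before the command-line arguments, this -n 8 overrides the -n 4 from the config file.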

4. Analyze Results

The PyTest output consolidates results, failures, and logs across all parallel runs for easy analysis.

Detailed HTML reports can also be generated for debugging failing tests, for example with the pytest-html plugin's --html=report.html option.

Choosing Python Test Frameworks for Parallelism

While PyTest has great parallel support, other Python testing frameworks have direct or plugin-based parallel capabilities:

| Framework | Parallel Features | Pros | Cons |
|---|---|---|---|
| PyTest | Parallel execution via the pytest-xdist plugin | Simple syntax, easy scaling | Customization can get complex |
| Unittest | nose2 and other runners or plugins enable parallelism | Mature ecosystem integration, easy debugging | Significant configuration overhead |
| Robot Framework | Parallel execution via the Pabot runner; remote library support | Simplified test scripts, CI/CD integration | Limited debugging capabilities |
| Locust | Specialized for distributed load and scalability testing | Easy cloud scalability, web UI reporting | Not designed for functional testing |

Based on project needs, choose frameworks balancing ease of use with customization flexibility.

Handling Shared Test Data

Parallel runs often depend on shared test data, so shared state and data dependencies need deliberate handling:

Segregate Data Setup

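Perform suite-level data setup in a session-scoped fixture so it is not repeated for every test:

```python
import pytest

@pytest.fixture(scope="session")
def db_data():
    # Database setup runs once per test session. Under pytest-xdist each
    # worker process is its own session, so this runs once per worker.
    ...
```

Tests can then read this data. If they also modify it, give each worker its own copy so workers do not interfere.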
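Sometimes setup must happen only once across all workers, for example when seeding a shared staging database. The pytest-xdist documentation describes a similar pattern: guard the setup with a file lock and cache its result in a directory all workers share. This sketch reproduces the idea with the standard library's fcntl.flock instead of the third-party filelock package, so it is POSIX-only. load_or_build and seed_database are hypothetical names.

```python
import fcntl
import json
from pathlib import Path

def load_or_build(cache_file, build):
    """Run build() once across processes; later callers reuse its JSON result."""
    cache_file = Path(cache_file)
    lock_path = cache_file.with_name(cache_file.name + ".lock")
    with open(lock_path, "w") as lock:
        # Blocks until no other process holds the lock
        fcntl.flock(lock, fcntl.LOCK_EX)
        try:
            if cache_file.is_file():
                return json.loads(cache_file.read_text())
            data = build()
            cache_file.write_text(json.dumps(data))
            return data
        finally:
            fcntl.flock(lock, fcntl.LOCK_UN)

# In conftest.py (sketch): the parent of the per-worker temp dir is shared
# by all workers of one run
# @pytest.fixture(scope="session")
# def db_data(tmp_path_factory):
#     root = tmp_path_factory.getbasetemp().parent
#     return load_or_build(root / "db_data.json", seed_database)
```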

Parameterize Unique Values

Generate input variations programmatically instead of hardcoding:

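```python
import pytest

def generate_user_ids(count=4):
    # Hypothetical implementation; keep values deterministic, since pytest-xdist requires every worker to collect the same tests
    return [f"user-{i:03d}" for i in range(count)]

@pytest.mark.parametrize("user_id", generate_user_ids())
def test_edit_profile(user_id):
    ...  # Edit this case's own profile; no other parallel case touches it
```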

This avoids collisions across parallel test runs.
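Some values only need to be unique rather than enumerated as separate test cases. Those can be generated at run time inside the test, where they do not affect collection. A minimal sketch, using a hypothetical unique_username helper that combines the xdist worker name with a random UUID fragment:

```python
import os
import uuid

def unique_username(prefix="user"):
    # The worker name rules out collisions between workers; the UUID
    # fragment makes collisions within one worker very unlikely
    worker = os.environ.get("PYTEST_XDIST_WORKER", "master")
    return f"{prefix}-{worker}-{uuid.uuid4().hex[:12]}"
```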

Reset State Between Tests

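Clean up residual test state so the next test, on any worker, starts fresh. Doing this in fixture teardown ensures cleanup runs even when the test fails:

```python
import pytest

@pytest.fixture
def order(orders):  # `orders`: hypothetical API-client fixture
    order_id = orders.create()
    yield order_id
    # Teardown: reset order state even if the test failed
    orders.delete(order_id)

def test_checkout_order(order):
    ...  # Execute checkout logic against this test's own order
```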

Debugging Parallel Tests

While parallel testing improves velocity, failures become harder to reproduce because test order, timing, and worker assignment vary between runs. Apply these debugging practices:

Log Extensively

Enrich test output with start and end timestamps and process IDs:

```python
import os
from datetime import datetime

start_time = datetime.now()
print(f"{start_time} STARTED. Worker PID: {os.getpid()}")

# Test logic

end_time = datetime.now()
print(f"{end_time} ENDED. Worker PID: {os.getpid()}")
```

Cross-reference timestamps across parallel processes when debugging.
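Printing works, but PyTest captures stdout by default. A slightly sturdier sketch routes the same start and end markers through the standard logging module. Its %(process)d format field records the PID automatically, and the worker name again comes from PYTEST_XDIST_WORKER. The traced helper and the logger name are hypothetical.

```python
import logging
import os
import time
from contextlib import contextmanager

log = logging.getLogger("parallel-tests")
log.setLevel(logging.INFO)
if not log.handlers:
    handler = logging.StreamHandler()
    # asctime and process are standard LogRecord attributes
    handler.setFormatter(logging.Formatter("%(asctime)s pid=%(process)d %(message)s"))
    log.addHandler(handler)

WORKER = os.environ.get("PYTEST_XDIST_WORKER", "master")

@contextmanager
def traced(test_name):
    """Log STARTED/ENDED markers, with duration, around a block of test logic."""
    start = time.perf_counter()
    log.info("%s STARTED on worker %s", test_name, WORKER)
    try:
        yield
    finally:
        # Runs even if the test body raises, so failures still get an END marker
        elapsed = time.perf_counter() - start
        log.info("%s ENDED on worker %s after %.3fs", test_name, WORKER, elapsed)

# Usage inside a test:
# with traced("test_checkout"):
#     ...  # test logic
```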

Simulate Locally

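Replicate the integration test environment and rerun subsets of failing suites locally:

```shell
pytest tests/test_checkout.py -n 4
pytest tests/test_checkout.py --lf -n 0 -s
```

The first command reproduces parallel conditions with -n 4. pytest-xdist does not support -s (--capture=no). To see printed output, the second command reruns only the last-failed tests (--lf) with distribution disabled (-n 0) and with -s.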

Visualize Results

Use reporting dashboards in tools like Allure and ExtentReports to visually pinpoint failures and correlate logs.

Interactive reports make it easier to analyze parallel test runs.

Real-World Success Stories

Let’s highlight some real-world case studies that have leveraged parallel execution successfully:

Cloud Software Provider Qentinel

Qentinel adopted parallel testing to accelerate test cycles for their cloud platforms from 4 hours to just 22 minutes by utilizing their own Parelax platform.

This improved developer productivity through rapid validation cycles.

Careem’s Ride-Hailing Application

Careem’s engineering teams run 2500+ parallel browser tests leveraging Selenium Grid on AWS devices every night, catching regressions through continuous testing.

By shifting testing left into development sprints, they release reliably at a rapid weekly cadence.

Fanatics’ Sports E-Commerce Site

Sports platform Fanatics uses PyTest-based parallel testing capable of executing 4500 Selenium tests in under 15 minutes by spinning up containers programmatically.

Parallel testing unblocked their CI/CD pipelines allowing rapid production releases.

Through these examples, we see the scale achieved across e-commerce, ride-sharing and SaaS domains using well-architected parallel testing.

Integrating with CI/CD Pipelines

To reap benefits, parallel test execution should integrate tightly within CI/CD pipelines:

Triggering Test Jobs

Use CI orchestrators like Jenkins, CircleCI, and Travis CI to trigger parallel test runs on code changes:

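```yaml
# .circleci/config.yml
jobs:
  test:
    parallelism: 8  # CircleCI runs this job in 8 containers at once
```

Each container still needs its own share of the tests, which CircleCI's circleci tests split command can provide.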

Automated Reporting

Publish test results on CI dashboards for tracking:

```yaml
# Send Allure test reports (a step in the job's steps list)
- store_artifacts:
    path: allure-report
    destination: allure
```

Keeping reports as pipeline artifacts makes results comparable across runs.

Just-In-Time Scaling

Leverage auto-scaling test environments to handle CI load:

```yaml
# Illustrative pseudo-config; the exact syntax depends on your CI tool
pipelines:
  test:
    environment+:
      - Selenium-Grid = 2
```

Provision additional browsers dynamically based on pending test queues.
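The scaling rule itself is usually simple arithmetic. This minimal sketch assumes two things: that you can read the number of pending sessions from your Grid or CI queue, and that each browser node serves a fixed number of concurrent sessions. Both inputs, and the function name, are hypothetical.

```python
import math

def nodes_to_provision(pending_sessions, sessions_per_node, min_nodes=1, max_nodes=20):
    """Return how many browser nodes to run so the queue drains in one wave,
    clamped to a floor (warm capacity) and a ceiling (budget or quota)."""
    if sessions_per_node <= 0:
        raise ValueError("sessions_per_node must be positive")
    needed = math.ceil(pending_sessions / sessions_per_node)
    return max(min_nodes, min(max_nodes, needed))
```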

Scaling Parallel Execution

While parallel testing boosts velocity, scaling tests optimally requires some strategy:

Dynamic Worker Pools

Adapt worker count programmatically based on suite size and complexity:

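```python
def choose_worker_count(tests):
    # Thresholds are illustrative; tune them to your suite and hardware
    test_count = len(tests)
    if test_count > 500:
        worker_count = 16
    elif test_count > 100:
        worker_count = 8
    else:
        worker_count = 4  # assumed default for small suites
    return worker_count
```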
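Size thresholds alone can oversubscribe a small CI runner. pytest-xdist's -n auto already sizes the pool by CPU count. The sketch below combines that idea with the size-based thresholds: it caps the worker count at the available CPUs and at the number of tests, then builds the -n argument. The function names and the default of 4 workers are assumptions.

```python
import os

def worker_count_for(test_count, cpu_count=None):
    # Illustrative size-based target, using the thresholds above
    if test_count > 500:
        target = 16
    elif test_count > 100:
        target = 8
    else:
        target = 4
    cpus = cpu_count or os.cpu_count() or 1
    # Never start more workers than CPUs or tests, and always at least one
    return max(1, min(target, cpus, test_count))

def pytest_args(test_count, cpu_count=None):
    return ["-n", str(worker_count_for(test_count, cpu_count))]

# e.g. subprocess.run(["pytest", *pytest_args(test_count=320)], check=False)
```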

Multi-Stage Parallelism

Group test targets across different stages for cascading parallelism:

```text
CI pipeline
     |
Parallel Stage 1
  |          |
Tests      Tests
  |          |
Parallel Stage 2
  |          |
Tests      Tests
```

Splitting tests across multiple waves keeps each stage's complexity contained.
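The staged layout can be sketched with the standard library. Tests inside a stage run concurrently, and the next stage starts only if every test in the current one passed. The stage names and placeholder test callables are hypothetical. In a real pipeline, each stage would more likely be a separate CI job running pytest -n.

```python
from concurrent.futures import ThreadPoolExecutor

def run_stages(stages, max_workers=4):
    """Run each stage's tests in parallel; stop after the first failing stage.

    stages: list of (stage_name, [callables returning True on pass]).
    Returns {stage_name: [bool, ...]} for the stages that actually ran.
    """
    results = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        for name, tests in stages:
            # pool.map preserves input order, so outcomes line up with tests
            outcomes = list(pool.map(lambda test: test(), tests))
            results[name] = outcomes
            if not all(outcomes):
                break  # later stages are skipped when this wave fails
    return results

if __name__ == "__main__":
    fast_checks = [lambda: True, lambda: True]
    browser_tests = [lambda: True, lambda: False]
    nightly = [lambda: True]
    print(run_stages([("stage1", fast_checks), ("stage2", browser_tests), ("stage3", nightly)]))
```

Here stage3 never runs, because one browser test in stage2 fails.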

Resource Monitoring

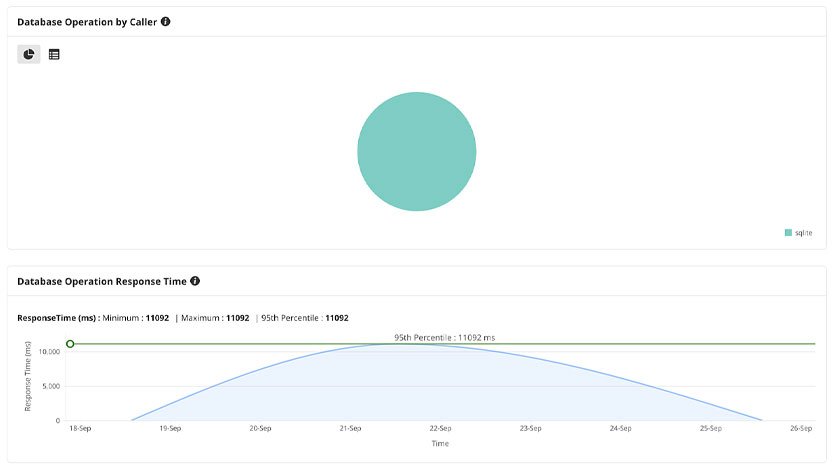

Monitor system utilization in parallel environments to prevent resource saturation:

Fine-tune runs based on live resource consumption.
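As a minimal example of tuning from live consumption, this sketch sizes the worker pool from the machine's recent load average and leaves headroom for browsers and the application under test. os.getloadavg() is Unix-only. The headroom policy and the function name are assumptions to adjust, not a standard rule.

```python
import math
import os

def workers_from_load(cpu_count=None, load_1min=None, headroom=1):
    """Suggest a worker count from idle CPU capacity (live load is Unix-only)."""
    cpus = cpu_count or os.cpu_count() or 1
    if load_1min is None:
        load_1min = os.getloadavg()[0]  # 1-minute load average
    # Treat each unit of load as one busy CPU and keep `headroom` CPUs free
    idle = cpus - math.ceil(load_1min) - headroom
    return max(1, idle)
```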

The Future of Parallel Testing

As test automation matures, some interesting trends around parallel testing are emerging:

Smart test grouping: ML techniques like test clustering analyze test execution history and runtime patterns to group compatible tests for optimized parallel scheduling.

Predictive auto-scaling: Leveraging neural nets, test platforms can forecast resourcing needs for upcoming test runs and trigger provisioning automatically.

Unified data testing: Shared data pools let tests from disparate workflows access consistent test data without explicit data dependencies, while frameworks manage concurrency and isolation implicitly.

These innovations will enable teams to focus on test coverage rather than just execution orchestration.

Supercharge Test Coverage with Parallel Execution

This brings us to the end of our detailed guide on implementing parallel test execution with Selenium Python. The key takeaways covered include:

Challenges in maximizing test coverage and how parallel testing addresses common bottlenecks for automation engineers.

Configuration guidelines for setting up the test environment, runners, test data and frameworks before enabling parallel execution.

Implementation techniques for executing parallel Selenium tests leveraging PyTest while supporting scale and debugging needs.

Reporting solutions and real-world case studies illustrating the commercial impact of parallel automated testing.

Emerging trends that will further evolve parallel testing through intelligent automation and unified data access.

By adopting parallel test execution techniques, test automation teams can overcome velocity challenges and free up the time needed to run enough tests to approach 90%+ coverage targets consistently.

This manifests in shorter feedback cycles between test, development and QA, enabling both faster feature delivery and more reliable, resilient software across enterprise systems.

FAQs

What are the key benefits of parallel testing?

The main benefits are faster test cycles, increased test volume and scope, reduced bottlenecks, and higher test coverage. By using multiple resources simultaneously, parallel testing enables running more tests in shorter durations without compromising stability.

How is parallel testing different from sequential testing?

In sequential execution, automated test cases run one after the other on a single machine. Parallel execution distributes tests across multiple CPUs and machines concurrently for much faster execution.

What kind of tests are best suited for parallelization?

Modular, independent test cases with no dependencies or shared state work optimally in parallel environments. Tests that require substantial contextual setup are harder to parallelize.

What test runners support parallel test execution?

PyTest supports parallel testing in Python through the pytest-xdist plugin. Unittest has no built-in parallelism; runners such as nose2 provide it through plugins.

How can I leverage parallel testing on cloud infrastructure?

Cloud providers make it easy to spin up distributed test infrastructure programmatically. Running Selenium Grid nodes in cloud containers, headless browsers on AWS Lambda, or tests in CircleCI parallel containers lets execution capacity scale with demand, within account quotas and budget.

What reporting tools can help debug parallel test failures?

Allure and ExtentReports provide consolidated reporting dashboards that collate results across parallel test runs for easy analysis. Their logs and screenshots help isolate failures that occur inconsistently (flaky tests).

How can I optimize scaling for large test suites?

Optimal scaling requires balancing suite size with available parallel resources. Strategies like multi-stage workflows, dynamic worker pools and run-time monitoring help scale efficiently.